Manifesto

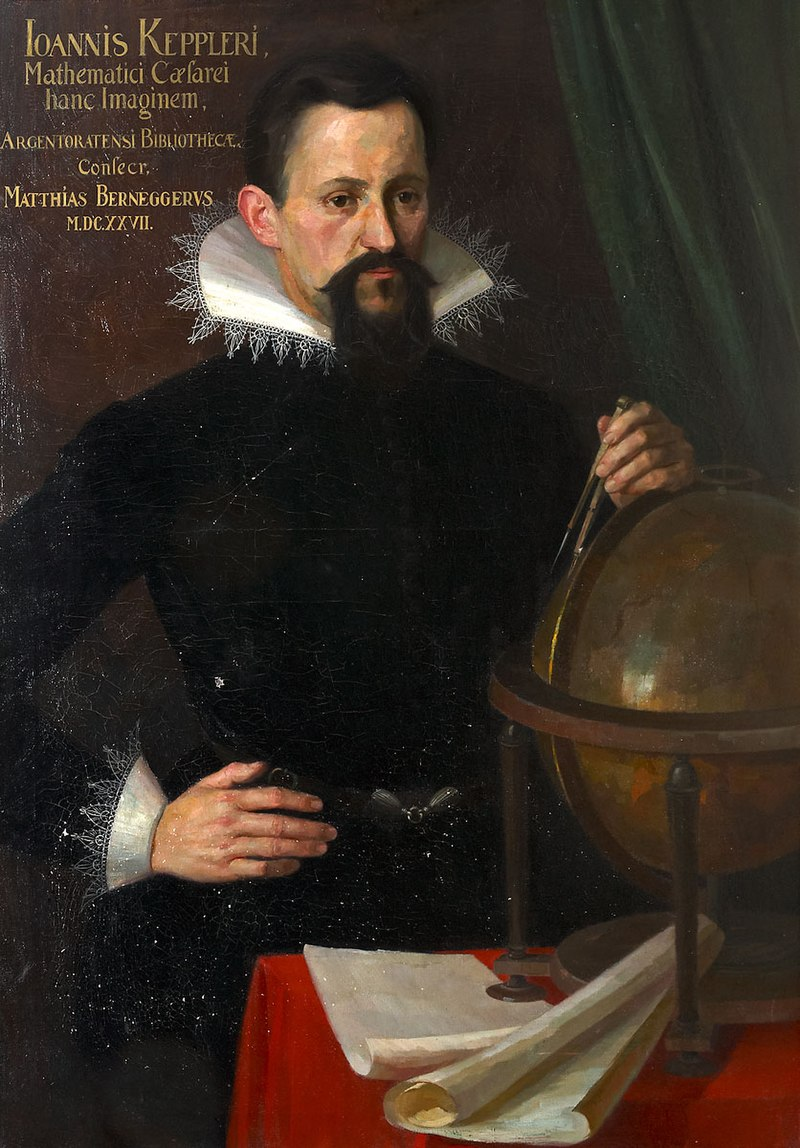

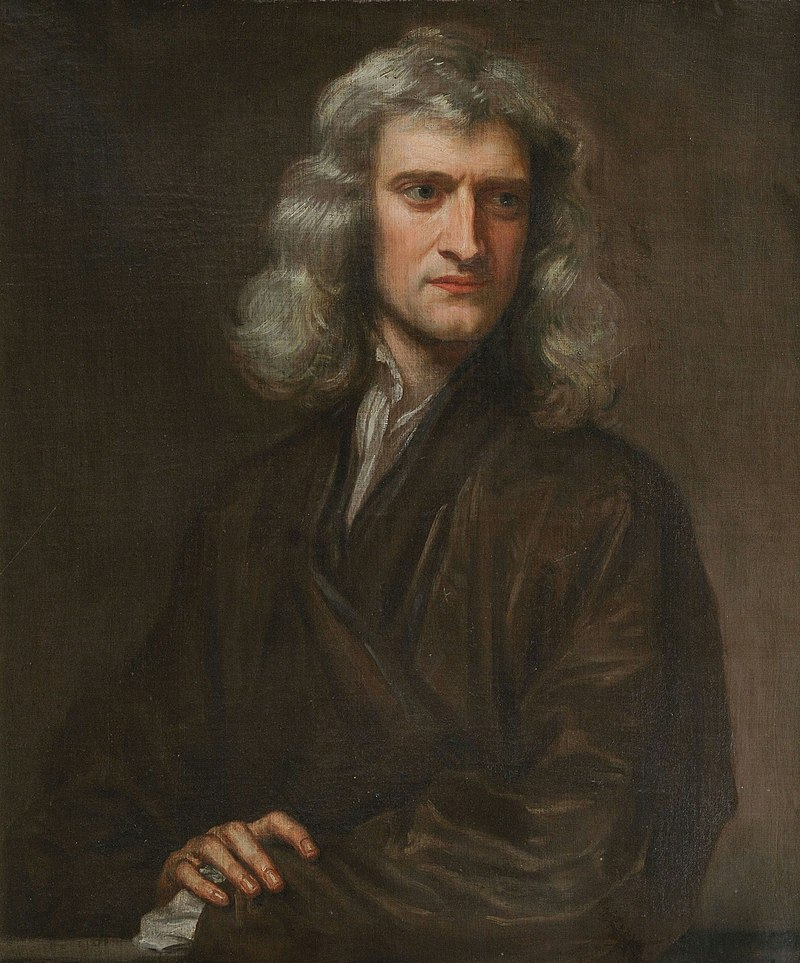

Ever since the time of Isaac Newton, there have been two different paradigms for scientific research: the Keplerian paradigm and the Newtonian paradigm.

The Keplerian paradigm, often referred to as the “data-driven” approach, seeks to extract new physical rules or trends through data analysis and then use these rules to solve practical problems. The discovery of Kepler’s laws of planetary motion is the canonical example of this paradigm. Nowadays, many successful examples in bioinformatics and cheminformatics have demonstrated the effectiveness of this paradigm in areas ranging from multi-scale modeling and protein structure prediction to drug discovery and disease treatment.

The Newtonian paradigm works from first principles, aiming to uncover the fundamental physical rules that govern the world as we know it. Based on these principles, scientists are able to explain most experimentally observed phenomena. One of the most successful such theories is quantum mechanics, because it provides almost all of the laws needed for much of engineering and the natural sciences. However, as pointed out by Dirac, “the exact application of these laws leads to equations much too complicated to be soluble”. The central difficulty is called “the curse of dimensionality”, i.e., the problems we encounter are too high-dimensional to be solved efficiently. For a long time, natural scientists have only been able to handle such equations with at most thousands of variables.

Machine learning techniques, especially deep learning (or AI more generally), have emerged as effective tools for approximating high-dimensional functions, as illustrated by their unprecedented success in computer vision (CV) and natural language processing (NLP). In the Newtonian paradigm, AI methods have been combined with physical laws to solve problems and simulate systems far more complicated than toy examples. In the Keplerian paradigm, AI can be applied directly to analyze and learn from data in an end-to-end manner. Given the promise of AI for solving real and challenging scientific problems, “AI for Science” (AI4Science) has become an established term and has gained wide currency in both the AI and scientific research communities. In the past few years, successful applications of AI methods have opened up a wide research avenue for both communities, from AlphaFold2 [1], which solves the 50-year-old protein structure prediction puzzle, and DeePMD [2], which extends ab initio simulation to unprecedentedly large scales, to the control of a nuclear reactor with AI agents [3]. A new paradigm of scientific research empowered by AI has taken shape, and these successes have paved the way for it. However, because scientific discovery has a very broad scope spanning many different disciplines, many grand challenges that are critical to our lives remain unsolved. Despite the early successes, we have to acknowledge that AI for Science is still nascent and requires joint efforts from both the AI and scientific communities.

We are living in an era with the opportunity and means to tackle grand challenges in scientific discovery. To advance this emerging field and bridge the gaps between the AI and scientific communities, this blog aims to equip researchers in the AI community with some basic scientific knowledge and an overview of new challenges in scientific discovery, which may look significantly different from common AI application areas such as computer vision and speech recognition.